Before you begin any data analysis project, you need to step back and inspect the dataset before doing anything with it. The Pandas library provides a number of useful functions for this purpose, and value_counts is one of them. Pandas value_counts returns an object containing the counts of distinct values in a pandas Series (a single DataFrame column), sorted in descending order.

However, most users tend to overlook that this function can be used with more than just the default parameters. If you want a more guided experience when building these types of expressions, you can use the new visual calculation editor. In some ArcGIS Arcade profiles, such as Visualization and Labeling, apps only request the data attributes required for rendering each feature or label. Some expressions reference field names dynamically, with variables instead of string literals. This makes it hard for rendering and labeling engines to detect the fields required for rendering. The Arcade Expects function lets you explicitly indicate the required fields as a list.

You can also request all or a subset of fields using a wildcard. Because expressions execute on a per-feature basis, the wildcard should be used with caution, especially in layers containing many features. Requesting too much data can result in very poor app performance. In vaex, wrapping "online" scikit-learn estimators with the IncrementalPredictor class turns them into vaex pipeline objects. Thus, they can take full advantage of the vaex serialization and pipeline system. While the underlying estimator must implement the .partial_fit method, this class provides the usual .fit method, and the rest happens behind the scenes.

One can also iterate over the data multiple times (epochs), and optionally shuffle each batch before it is sent to the estimator. The predict method returns a numpy array, while the transform method adds the prediction as a virtual column to a vaex DataFrame. MySQL's JSON_OBJECTAGG() returns NULL if the result contains no rows, or in the event of an error. An error occurs if any key name is NULL or the number of arguments is not equal to 2. A pivot table consists of counts, sums, or other aggregations derived from a table of data.

You may have used this feature in spreadsheets, where you pick the rows and columns to aggregate on, and the values for those rows and columns. It lets us summarize data grouped by different values, including values in categorical columns. Note that once the aggregation operations are complete, calling the GroupBy object with a new set of aggregations has no effect. You must create a new GroupBy object in order to apply a new aggregation. In addition, certain aggregations are only defined for numerical or categorical columns, and calling an aggregation on the wrong data type raises an error.
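As a rough pandas illustration of the pivot-table idea above, the sketch below uses DataFrame.pivot_table on a small hypothetical Courses/Duration/Fee table (the data is invented for the example). Rows come from one column, columns from another, and each cell holds an aggregate of a third, much as in a spreadsheet pivot table.

```python
import pandas as pd

# Hypothetical course data; the column names mirror the examples in the text.
df = pd.DataFrame({
    "Courses": ["Spark", "Spark", "Pandas", "Pandas", "Hadoop"],
    "Duration": ["30days", "40days", "30days", "30days", "40days"],
    "Fee": [20000, 25000, 22000, 24000, 26000],
})

# Rows from Courses, columns from Duration, each cell = sum of Fee.
# fill_value=0 replaces combinations that never occur in the data.
pivot = df.pivot_table(index="Courses", columns="Duration",
                       values="Fee", aggfunc="sum", fill_value=0)
print(pivot)
```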

With the Arcade Sort function, if all the items in the array are of the same type, an appropriate sort function is used. If they are of different types, the items are converted to strings. If the array contains objects and no user-defined function is provided, no sort happens.

The Arcade All function indicates whether all the elements in a given array pass a test from the provided function: it returns true if the function returns true for every item in the input array. In PySpark, crosstab computes a pair-wise frequency table of the given columns. The number of distinct values for each column should be less than 1e4.

The first column of each row will be the distinct values of col1, and the column names will be the distinct values of col2. Pairs that have no occurrences will have zero as their counts. DataFrame.crosstab() and DataFrameStatFunctions.crosstab() are aliases. In pandas, for example, df.groupby(['Courses','Duration'])['Fee'].count() groups on the Courses and Duration columns and then calculates the count for each group. The Arcade None function tests whether none of the elements in a given array pass a test from the provided function.
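A minimal sketch of the groupby example from the text, run on a small hypothetical table. It also calls pandas' own pd.crosstab, which is a pandas function rather than the Spark crosstab described above, but which likewise reports a count of zero for pairs that never occur.

```python
import pandas as pd

# Hypothetical data; column names follow the example in the text.
df = pd.DataFrame({
    "Courses": ["Spark", "Spark", "Pandas", "Pandas", "Spark"],
    "Duration": ["30days", "30days", "40days", "40days", "40days"],
    "Fee": [20000, 22000, 25000, 24000, 23000],
})

# Count the Fee values in each (Courses, Duration) group.
counts = df.groupby(["Courses", "Duration"])["Fee"].count()
print(counts)

# pandas' pair-wise frequency table: rows are Courses, columns are Duration,
# and the (Pandas, 30days) pair, which never occurs, gets a count of zero.
table = pd.crosstab(df["Courses"], df["Duration"])
print(table)
```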

It returns true if the testFunction returns false for all items in the input array. The Arcade Any function tests whether any of the elements in a given array pass a test from the provided function, returning true if the function returns true for at least one item in the input array. With MySQL's WITH ROLLUP modifier, the NULL values in the super-aggregate rows cannot be tested as NULL values in join conditions or the WHERE clause to determine which rows to select. For example, you cannot add WHERE product IS NULL to the query to eliminate all but the super-aggregate rows from the output.
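The Arcade All, Any and None functions map naturally onto Python's built-in all() and any(). The sketch below is a Python analogue, not Arcade code; the helper names and the is_empty test (treating None or an empty string as empty) are assumptions made for illustration.

```python
def all_pass(items, test):
    # True when every item passes the test (like Arcade All).
    return all(test(item) for item in items)

def any_pass(items, test):
    # True when at least one item passes the test (like Arcade Any).
    return any(test(item) for item in items)

def none_pass(items, test):
    # True when no item passes the test (like Arcade None).
    return not any(test(item) for item in items)

def is_empty(value):
    # Hypothetical stand-in for an emptiness test: None or "" counts as empty.
    return value is None or value == ""

fields = ["Main St", "", "Springfield"]
print(all_pass(fields, is_empty))   # False
print(any_pass(fields, is_empty))   # True
print(none_pass(fields, is_empty))  # False
```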

MySQL's JSON_ARRAYAGG() aggregates a result set as a single JSON array whose elements consist of the rows. The function acts on a column or an expression that evaluates to a single value. The BIT_AND(), BIT_OR(), and BIT_XOR() aggregate functions perform bit operations. Prior to MySQL 8.0, bit functions and operators required BIGINT (64-bit integer) arguments and returned BIGINT values, so they had a maximum range of 64 bits. Non-BIGINT arguments were converted to BIGINT before the operation was performed, and truncation could occur.
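To make the bit aggregates concrete, here is a small Python sketch (not MySQL code) that folds one group's values with the matching bitwise operator, which is what BIT_AND(), BIT_OR() and BIT_XOR() compute per group. The values are arbitrary.

```python
from functools import reduce
import operator

# Arbitrary values for one group: 0b1110, 0b1011, 0b0110.
values = [14, 11, 6]

bit_and = reduce(operator.and_, values)  # bits set in every value
bit_or = reduce(operator.or_, values)    # bits set in at least one value
bit_xor = reduce(operator.xor, values)   # bits set in an odd number of values
print(bit_and, bit_or, bit_xor)  # 2 15 3
```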

In Amazon Redshift, when used with APPROXIMATE, a COUNT function uses a HyperLogLog algorithm to approximate the number of distinct non-NULL values in a column or expression. Queries that use the APPROXIMATE keyword run much faster, with a low relative error of around 2%. Approximation is warranted for queries that return a large number of distinct values, in the millions or more per query, or per group if there is a GROUP BY clause. For smaller sets of distinct values, in the thousands, approximation might be slower than a precise count. Just as when creating a level of detail calculation, you can use the visual calculation editor to build a rank calculation. Select the fields you want to include in the calculation, then select the fields you want to use to rank the rows and the type of rank you want to calculate.
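The choice of "type of rank" mostly comes down to how ties are handled. As a pandas analogue (not Tableau Prep itself), Series.rank exposes this through its method parameter; the scores below are made up.

```python
import pandas as pd

# Made-up scores; "a" and "c" are tied.
scores = pd.Series([90, 85, 90, 70], index=["a", "b", "c", "d"])

# Competition-style rank: tied rows share the lowest rank, and a gap follows.
min_rank = scores.rank(method="min", ascending=False)
# Dense rank: tied rows share a rank, and no gap follows.
dense_rank = scores.rank(method="dense", ascending=False)
print(pd.DataFrame({"score": scores, "min": min_rank, "dense": dense_rank}))
```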

A preview of the results is shown in the left pane, so you can see the effect of your selections as you go. In pandas, use DataFrame.groupby() to group the rows by a column and the count() method to get the count for each group; count() ignores None and NaN values. Grouping on the Courses column, for example, calculates how many times each course value is present. To query MongoDB with SQL, Studio 3T supports many SQL-related expressions, functions, and methods for entering a query.
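A short sketch, on hypothetical data, of how count() skips None and NaN while size() counts every row in each group:

```python
import numpy as np
import pandas as pd

# Hypothetical data with missing fees (None becomes NaN in a numeric column).
df = pd.DataFrame({
    "Courses": ["Spark", "Pandas", "Spark", "Pandas", "Spark"],
    "Fee": [20000, None, 22000, np.nan, 25000],
})

grouped = df.groupby("Courses")["Fee"]
non_null = grouped.count()       # ignores None/NaN: Pandas 0, Spark 3
rows_per_group = grouped.size()  # counts every row: Pandas 2, Spark 3
print(non_null)
print(rows_per_group)
```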

This tutorial uses the Customers data set for the examples. In PySpark's DataFrame.fillna(), columns specified in subset that do not have a matching data type are ignored. For example, if value is a string and subset contains a non-string column, then the non-string column is simply ignored. For a discussion of argument evaluation and result types for bit operations, see the introductory discussion in Section 12.13, "Bit Functions and Operators".

It is not permissible to include column names in a SELECT clause that are not referenced in the GROUP BY clause. The only column names that can be displayed, along with aggregate functions, are those listed in the GROUP BY clause. Since ENAME is not included in the GROUP BY clause, an error message results. As an extension, Texis also allows the GROUP BY clause to contain expressions instead of just column names. This should be used with caution, and the same expression should be used in the SELECT clause as in the GROUP BY clause.

If you SELECT SALARY and GROUP BY SALARY/1000, you will see one sample salary from each matching group. Transformation methods return a DataFrame with the same shape and indices as the original, but with different values. With both aggregation and filter methods, the resulting DataFrame will commonly be smaller than the input DataFrame. This is not true of a transformation, which transforms individual values but retains the shape of the original DataFrame. To read the data into memory with the correct dtypes, you need a helper function to parse the timestamp column. This is because the timestamp is expressed as the number of milliseconds since the Unix epoch, rather than as fractional seconds, which is the convention.
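The difference between aggregation and transformation shows up when the same group mean is computed both ways on a small hypothetical table. The last step parses millisecond epoch values with pd.to_datetime(..., unit="ms"); the helper the text refers to is not shown, so this is only an assumption about how such parsing might look.

```python
import pandas as pd

df = pd.DataFrame({"dept": ["A", "A", "B"], "salary": [100, 300, 200]})

# Aggregation: one row per group, so the result is smaller than df.
mean_per_dept = df.groupby("dept")["salary"].mean()

# Transformation: one value per original row, keeping df's index and shape.
centered = df.groupby("dept")["salary"].transform(lambda s: s - s.mean())

# Millisecond epoch values converted to timestamps (assumed parsing approach).
ts = pd.to_datetime(pd.Series([0, 1500]), unit="ms")
print(mean_per_dept, centered, ts, sep="\n\n")
```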

The output isn't particularly useful for us, as each of our 15 rows has a value for every column. However, this can be very useful when your data set is missing many values. Using the count method can help identify columns that are incomplete. From there, you can decide whether to exclude those columns from your processing or to provide default values where necessary. In JavaScript's Array.prototype.filter(), array elements that do not pass the callbackFn test are skipped and are not included in the new array.
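A minimal sketch of using count() to find incomplete columns, assuming a small hypothetical table with some missing values; filling the gaps with the column mean is just one possible default.

```python
import numpy as np
import pandas as pd

# Hypothetical records with gaps in two columns.
df = pd.DataFrame({
    "name": ["Ann", "Bob", "Cid"],
    "email": ["ann@example.org", np.nan, None],
    "age": [31, 42, np.nan],
})

non_null = df.count()  # number of non-missing values per column
incomplete = non_null[non_null < len(df)].index.tolist()
print(non_null)
print("incomplete columns:", incomplete)  # ['email', 'age']

# One option: provide a default (here the column mean) for a numeric column.
df_filled = df.fillna({"age": df["age"].mean()})
print(df_filled)
```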

Django's add template filter will first try to coerce both values to integers. If this fails, it will try to add the values together anyway. This works on some data types (strings, lists, etc.) and fails on others. The data is passed to an internal system table and then aggregated. The maximum size of this system table is limited to that of normal internal tables. More specifically, the system table is always required if one of the additions PACKAGE SIZE or UP TO, OFFSET is used at the same time.
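The coercion rule of the add filter can be sketched in Python as follows. This is an illustrative re-implementation with a hypothetical function name, not Django's source; returning an empty string when both attempts fail is an assumption about how the failure surfaces.

```python
def add_filter(value, arg):
    """Illustrative sketch of the add filter's coercion rule (not Django's source)."""
    try:
        # First attempt: treat both operands as integers.
        return int(value) + int(arg)
    except (ValueError, TypeError):
        try:
            # Fallback: let the operands' own + decide (strings, lists, ...).
            return value + arg
        except Exception:
            # Assumption: a failed addition yields an empty string.
            return ""

print(add_filter("3", 4))      # 7
print(add_filter("ab", "cd"))  # abcd
print(add_filter([1], [2]))    # [1, 2]
print(add_filter("a", 1))      # '' (prints an empty line)
```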

If the maximum size of the internal system table is exceeded, a runtime error occurs. In the Group by section, select the fields of the rows you want to compute values for. You can have multiple Group by fields for a single calculation. In this article, I will explain how to use groupby() and count() together, with examples. The groupby() function is used to collect identical data into groups and apply aggregate functions such as size/count to the grouped data. In a non-spatial setting, when all we need are summary statistics of the data, we aggregate our data using the groupby() function.

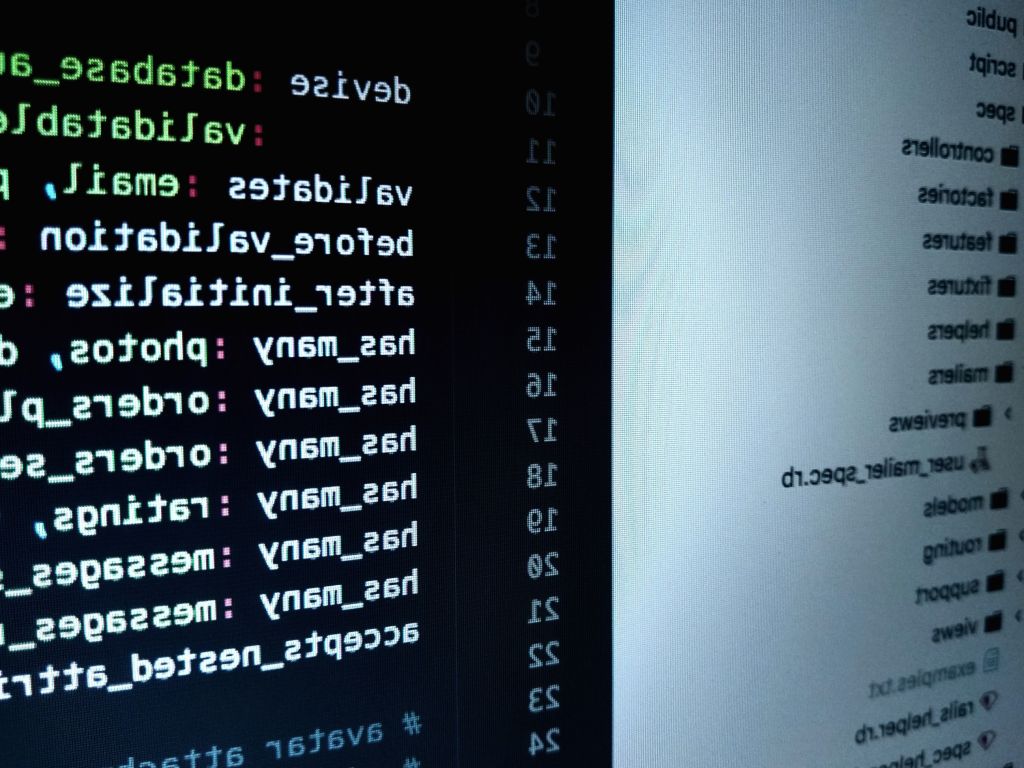

But for spatial data, we sometimes also need to aggregate geometric features. In the geopandas library, we can aggregate geometric features using the dissolve() function.

Basic use of the value_counts function

The value_counts function returns the count of all unique values in the given column, in descending order and excluding null values. We can quickly see that most courses have Beginner difficulty, followed by Intermediate and Mixed, and then Advanced. It is important to note that value_counts is applied here to a pandas Series, not to a whole DataFrame (pandas 1.1 and later also provide DataFrame.value_counts, which counts unique rows instead).

As a result, we use single brackets, df['your_column'], which return a Series, and not double brackets, df[['your_column']], which return a DataFrame. The value_counts() function returns a Series containing counts of unique values. The resulting object is in descending order, so that the first element is the most frequently occurring element.
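A minimal sketch of value_counts on a hypothetical difficulty column, showing the default descending counts along with the normalize and dropna parameters:

```python
import pandas as pd

# Hypothetical difficulty labels; None marks a course with no label.
df = pd.DataFrame({"difficulty": ["Beginner", "Beginner", "Intermediate", "Beginner",
                                  "Mixed", "Intermediate", None, "Advanced"]})

counts = df["difficulty"].value_counts()                    # descending, missing values dropped
shares = df["difficulty"].value_counts(normalize=True)      # proportions instead of counts
with_missing = df["difficulty"].value_counts(dropna=False)  # missing values counted too
print(counts, shares, with_missing, sep="\n\n")
```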

In PromQL, count_values outputs one time series per unique sample value. Each series has an additional label. The name of that label is given by the aggregation parameter, and the label value is the unique sample value. The value of each time series is the number of times that sample value was present. Between two instant vectors, the comparison operators behave as a filter by default, applied to matching entries. The metric name is dropped if the bool modifier is provided.
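To illustrate what count_values computes, the Python sketch below counts how many series share each sample value and attaches that value under a label, in the spirit of the PromQL expression count_values("version", build_version). The series names, the metric name build_version and the values are hypothetical, and this is not how Prometheus implements it.

```python
from collections import Counter

# Hypothetical instant vector: series name -> sample value of build_version.
samples = {"instance-a": 7, "instance-b": 8, "instance-c": 7}

# One output per unique sample value: the value goes into a "version" label
# (label values are strings), and the output value is how many series had it.
counts = Counter(samples.values())
output = [({"version": str(value)}, n) for value, n in counts.items()]
print(output)  # [({'version': '7'}, 2), ({'version': '8'}, 1)]
```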

This statement will return an error because you cannot use aggregate functions in a WHERE clause. WHERE is used with GROUP BY when you want to filter rows before grouping them. vaex also provides a class that conveniently wraps River models, making them vaex pipeline objects. Thus they can take full advantage of the vaex serialization and pipeline system.
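The WHERE restriction can be checked with Python's built-in sqlite3 module (SQLite, not MySQL, so the error message differs). The sketch shows the aggregate-in-WHERE error, then filters rows with WHERE before grouping and filters groups with HAVING, the standard SQL clause for conditions on aggregates; the table and values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (customer TEXT, amount INTEGER);
INSERT INTO orders VALUES
  ('ann', 50), ('ann', 70), ('bob', 20), ('bob', 5), ('cid', 200);
""")

# An aggregate inside WHERE is rejected, because WHERE runs before grouping.
try:
    conn.execute("SELECT customer FROM orders WHERE SUM(amount) > 50 GROUP BY customer")
    where_failed = False
except sqlite3.OperationalError as exc:
    where_failed = True
    print("WHERE with SUM() failed:", exc)

# WHERE drops individual rows first; HAVING then filters the grouped totals.
rows = conn.execute("""
    SELECT customer, SUM(amount) AS total
    FROM orders
    WHERE amount >= 10
    GROUP BY customer
    HAVING SUM(amount) > 50
    ORDER BY customer
""").fetchall()
print(rows)  # [('ann', 120), ('cid', 200)]
```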

Only the River models that implement the learn_many method are compatible. One can also wrap an entire River pipeline, as long as every pipeline step implements the learn_many method. With the wrapper, one can iterate over the data multiple times (epochs), and optionally shuffle each batch before it is sent to the estimator. The vaex str.get method extracts a character at the specified position from each sample of a string column.

Note that if the specified position is out of bounds of the string sample, this method returns '', whereas pandas returns NaN. In PySpark, Row can be used to create a row object by using named arguments; before Spark 3.0 the fields were sorted by name, while newer versions keep them in the order given. It is not allowed to omit a named argument to represent that the value is None or missing. In MySQL, GROUP_CONCAT() returns the concatenated values of expression combinations. To sort in reverse order, add the DESC keyword to the name of the column you are sorting by in the ORDER BY clause.
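The pandas side of the out-of-bounds behaviour described above can be checked directly with Series.str.get; the strings are arbitrary.

```python
import pandas as pd

s = pd.Series(["vaex", "ab"])
third_char = s.str.get(2)  # index 2 exists in "vaex" but not in "ab"
print(third_char.tolist())  # 'e' for "vaex", NaN for "ab"
```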

The default is ascending order; this may be specified explicitly using the ASC keyword. The default separator between values in a group is a comma (,). To specify a separator explicitly, use SEPARATOR followed by the string literal value that should be inserted between group values.

To eliminate the separator altogether, specify SEPARATOR ''. In a query that groups the EMPLOYEE table by department code, all rows that have the same department code are grouped together. The aggregate function AVG is calculated for the salary column in each group. The department code and the average departmental salary are displayed for each department.
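A sketch of the department query using Python's sqlite3 module on a hypothetical EMPLOYEE table (the names, department codes and salaries are invented). SQLite spells the separator as a second argument, group_concat(ename, '; '), instead of MySQL's GROUP_CONCAT(ename SEPARATOR '; '), and it does not guarantee the order of the concatenated names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee (ename TEXT, workdept TEXT, salary REAL);
INSERT INTO employee VALUES
  ('Ann', 'A00', 50000), ('Bob', 'A00', 40000),
  ('Cid', 'C01', 30000), ('Dee', 'C01', 36000), ('Eve', 'C01', 27000);
""")

# One output row per department: its code, average salary, and member names.
rows = conn.execute("""
    SELECT workdept, AVG(salary), group_concat(ename, '; ')
    FROM employee
    GROUP BY workdept
    ORDER BY workdept
""").fetchall()
for dept, avg_salary, names in rows:
    print(dept, avg_salary, names)
```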

The GROUP BY clause is commonly used together with five built-in, or "aggregate", functions. These functions perform special operations on an entire table or on a set, or group, of rows rather than on each row, and then return one row of values for each group. The pandas groupby operation is used to perform aggregation and summarization operations on multiple columns of a pandas DataFrame. These operations include splitting the data, applying a function, and combining the results. Use the Tile function to distribute rows into a specified number of buckets by creating a calculated field.

You select the fields that you want to distribute by, and the number of groups to use. You can also select additional fields for creating partitions, within which the tiled rows are distributed into groups. Use the Calculation editor to enter the syntax manually, or use the Visual Calculation editor to select the fields and let Tableau Prep write the calculation for you.
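Assuming that Tile distributes rows the way SQL's NTILE does (earlier buckets receive one extra row when the rows do not divide evenly), the idea can be sketched in plain Python. The ntile helper and the region/sales rows are hypothetical, and partitions are handled by tiling each region separately.

```python
from itertools import groupby

def ntile(values, n_buckets):
    """Pair each already-sorted value with a 1-based bucket number, NTILE style."""
    size, extra = divmod(len(values), n_buckets)
    buckets = []
    for bucket in range(1, n_buckets + 1):
        # The first `extra` buckets take one additional row.
        buckets.extend([bucket] * (size + (1 if bucket <= extra else 0)))
    return list(zip(values, buckets))

# Hypothetical (region, sales) rows; region acts as the partition field.
rows = sorted([("East", 10), ("East", 40), ("East", 25), ("West", 5), ("West", 15)])

tiled = []
for region, group in groupby(rows, key=lambda row: row[0]):
    sales = [amount for _, amount in group]
    tiled.extend((region, amount, bucket) for amount, bucket in ntile(sales, 2))
print(tiled)
```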

You can use pandas DataFrame.groupby().count() to group columns and compute the count aggregate (or size(), which also counts missing values); this calculates a row count for each group combination. The Arcade examples that follow use the existing isEmpty function as the testFunction. This is valid because isEmpty takes a single parameter and returns a boolean value.

With None, such an expression returns true if none of the fields are empty; with Any, it returns true if any of the fields are empty; and with All, it returns true if all of the fields are empty. In Cypher, collect() returns a single aggregated list containing the values returned by an expression; the list can contain heterogeneous elements, whose types are determined by the values returned by the expression. Aggregation can be computed over all the matching paths, or it can be further divided by introducing grouping keys.
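The grouping behaviour of collect() has a close pandas analogue: gather all values into one list, or aggregate into a list per grouping key. The table below is hypothetical, and the Cypher queries in the comments are only meant to show the correspondence.

```python
import pandas as pd

# Hypothetical people and the cities they live in.
people = pd.DataFrame({"city": ["Oslo", "Oslo", "Rome"],
                       "name": ["Ann", "Bob", "Cid"]})

# Like MATCH (p:Person) RETURN collect(p.name): one list over all rows.
all_names = people["name"].tolist()

# Like MATCH (p:Person) RETURN p.city, collect(p.name): city is the grouping key.
by_city = people.groupby("city")["name"].agg(list)
print(all_names)
print(by_city)
```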
